Privacy Engineering?

Grammarly taking more data than it should: an exploration of, and some musings on, the idea of privacy engineering in conjunction with application security assessments.

Recently, while sleuthing on Twitter as usual, I responded to a comment about how wonderful Grammarly was. I think Grammarly is wonderful too, but this was someone suggesting Grammarly should be used to assist in security reporting...

This was my response to the post, which Amy later addressed after much social discussion:

In the nicest possible way, don’t use grammarly for anything sensitive/confidential, especially as a security professional - or at least declare it the clients and the risks of where the data has additional exposure opportunities https://t.co/uwXMXDixUX0

— John Carroll | (@YoSignals) April 10, 2022

My Twitter is now @thecontractorio.

Amy has heard it from enough people now that there might be better options. We all generally agreed that while Grammarly isn't technically a keylogger, it's almost impossible to say it isn't logging keys when it's in use, because that's how it's able to provide the QA it delivers. Again, Grammarly is great at its job; it's just that sometimes that job isn't compatible with sensitivity, privacy, secrecy and anything else that isn't public and non-sensitive.

As happens on Twitter, eventually someone wants a new angle on a basic statement (don't use Grammarly for anything sensitive is the theme here). There are some interesting interactions in the thread, but one that stuck in my head was a former Grammarly employee trying to... kinda defend them? Mostly they just pointed at Google, or challenged my ability to understand how to info-sec, and when it got to the point of trying to discredit me I just moved that conversation to the 'that's cute' pile.

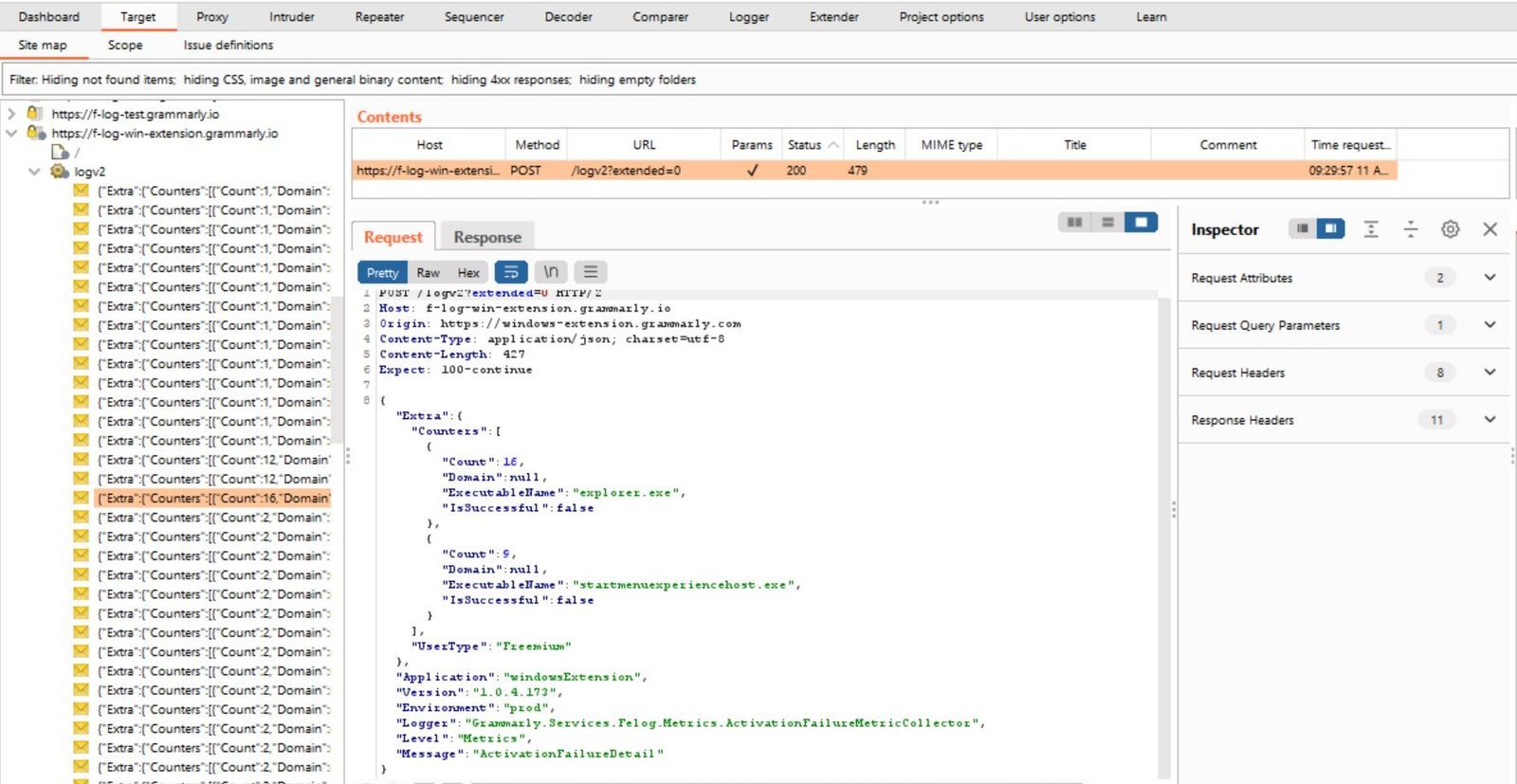

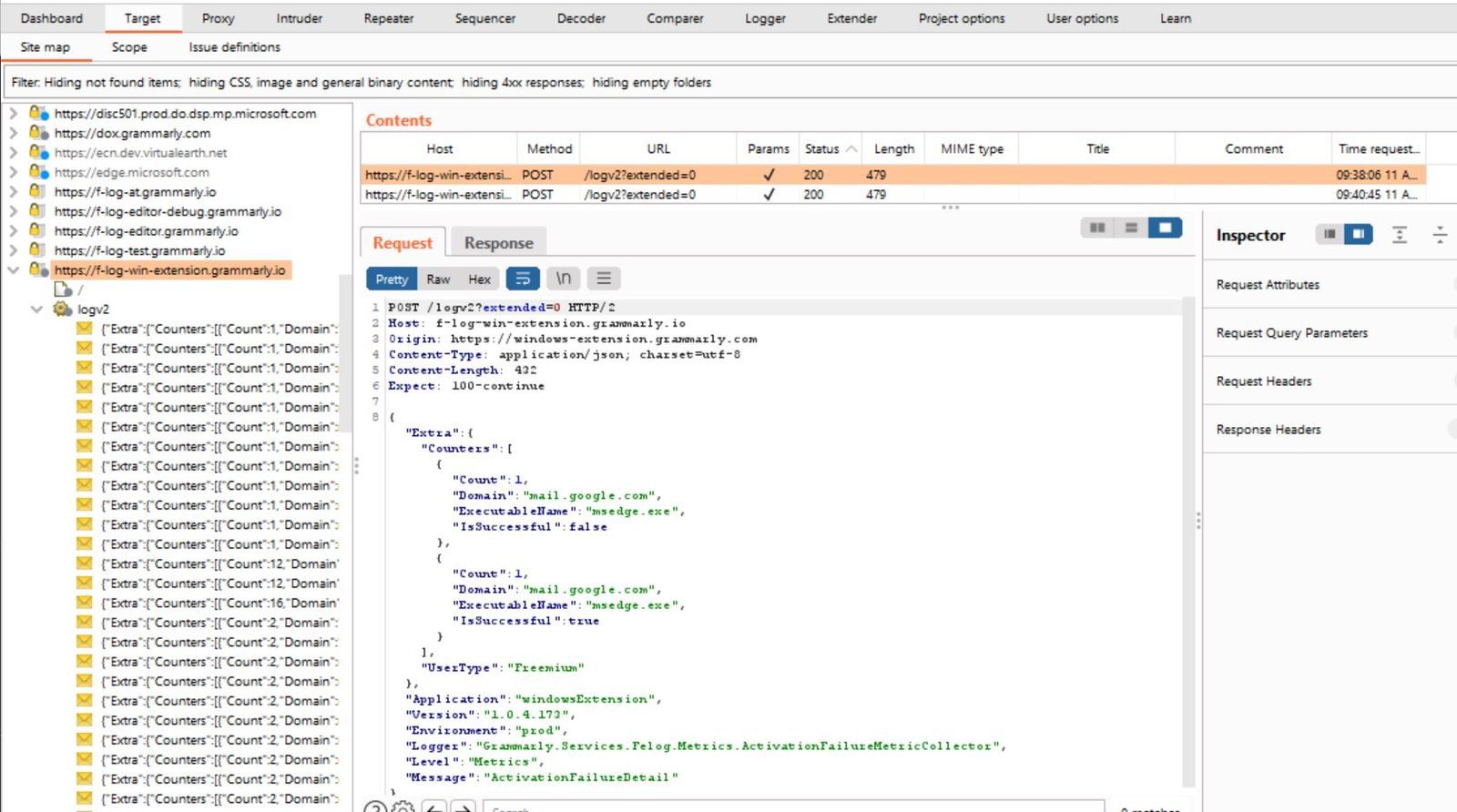

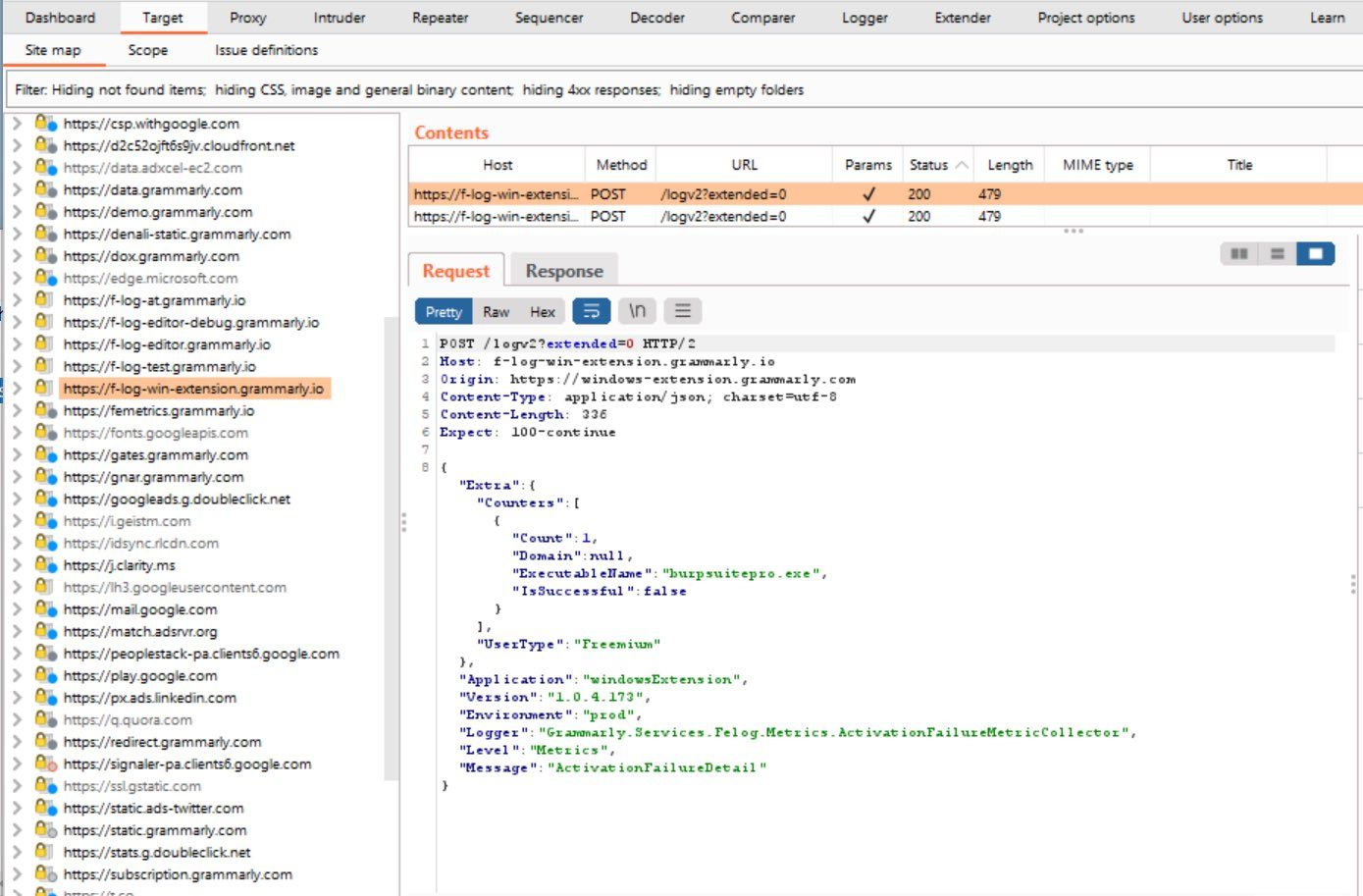

The next day I had the morning to myself, so I thought, you know what, let's look at Grammarly with a small scope: what does it look like running on Windows without actually being used? We know what it collects while doing its job, but is there anything else?

So I busted out the Burp Suite proxy and took a look at the traffic. You can see from the video and the screenshots below that it's posting quite a lot more than your spelling mistakes, which arguably could be used creatively for a few things. We can see it sending back which programs are running, at a rough time, from an IP address. We can see which domains I'm connecting to, and when I open a photo application or use Windows Explorer.

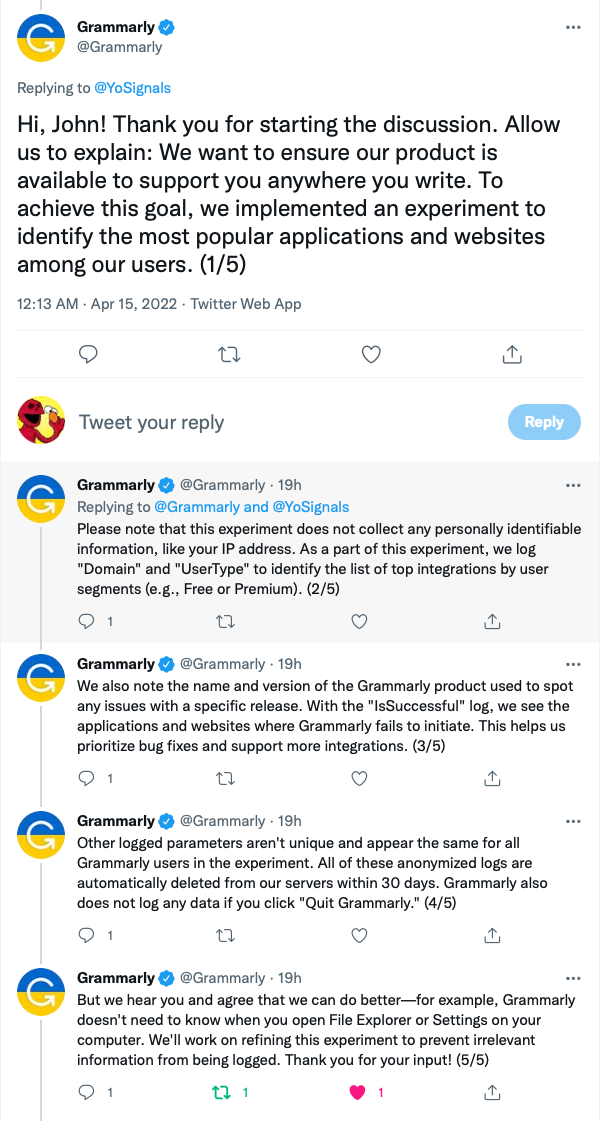

A few days passed and Grammarly reached out, and you know what, it wasn't bad. Obviously they still don't want to be framed as a keylogger, but here's what they had to say:

The bit I was drawn to was the last tweet: they hear me and agree they can do better. Fresh! Good.

So that got me thinking about how little control we have over external changes. Consider this: the business has a requirement, the organisation has looked around and chosen its preferred software to help the business goals, and the new software is introduced. In a reasonably mature organisation this application and its configuration may get pentested; equally, there may be a considered configuration, some patch management, and appropriate access for those who need to use it. There's stuff happening around the software that enables the data and in turn the business goals.

But how much understanding can you draw from a change log, or a note that says 'install your updates'? At what point does the DPO need to know that actually (like above) there are quite a lot of things happening outside the understood scope of this application's use? I don't know of one organisation where IT would even look this deep, let alone reach out to data protection. If it happens at all, it's not as a process and it's not in a policy, it's more by enthusiastic chance... does that have to change?

Looking at common DPO ways of working, this is what I'm feeling (and I do not want to throw any shade on DPOs):

'As we have continued to embrace engineering in security, I would expect the same to happen in privacy' - Ollie W

I like what Ollie is saying here, and it makes sense. The catch, if we want to speed this evolution along, is holding the attention of those discharging data protection duties long enough to show them how frequently the software and systems we have 'approved' change, and that the approval was a point in time. How frequently does that needle shift from tolerant to 'if you want to take the piss out of our agreement, why didn't you say so...'? I think those involved in data protection are even fewer in number than penetration testers, and they often lack the capability to evidence and compare what they're being promised in data processing policies. How can we give them good visibility over changes, and in turn over impact and hard lines being crossed or respected, without them needing deep technical knowledge of traffic interception, packet analysis and internet forensics? Some thoughts:

Telemetry engagements - application-style security assessments focused on the privacy of the application and what data goes where. Not as heavy as a 'web app pentest', I suspect, and the methodology would be smaller, but it would give us the visibility and confidence to know what's going where and whether that's good, bad, tolerable or otherwise. Follow-up question: what might that methodology look like?
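To make that a bit more concrete, here is a rough sketch of the sort of summary a telemetry engagement might hand to a data protection team: which hosts the application talked to, how much it sent, and which of those hosts fall outside what was agreed. Everything in it is an assumption for illustration. The `CAPTURED` records, the host names and the `AGREED_HOSTS` set are made up, and in practice the records would come from a proxy export rather than being typed in by hand.

```python
from collections import Counter
from urllib.parse import urlsplit

# Hypothetical sample of intercepted requests: (method, URL, body size in bytes).
# These hosts are invented for the example; they are not real Grammarly endpoints.
CAPTURED = [
    ("POST", "https://telemetry.example-vendor.test/v1/events", 2048),
    ("POST", "https://telemetry.example-vendor.test/v1/events", 1024),
    ("GET", "https://updates.example-vendor.test/latest", 0),
    ("POST", "https://ads.example-partner.test/collect", 512),
]

# Hosts the organisation has agreed the application may talk to (assumption).
AGREED_HOSTS = {"telemetry.example-vendor.test", "updates.example-vendor.test"}


def summarise(requests):
    """Return per-host request counts and per-host total bytes sent."""
    counts = Counter()
    sent = Counter()
    for _method, url, body_size in requests:
        host = urlsplit(url).hostname
        counts[host] += 1
        sent[host] += body_size
    return counts, sent


def unexpected_hosts(requests, agreed):
    """Return a sorted list of hosts seen in traffic but not in the agreed set."""
    seen = {urlsplit(url).hostname for _method, url, _size in requests}
    return sorted(seen - agreed)


if __name__ == "__main__":
    counts, sent = summarise(CAPTURED)
    for host in sorted(counts):
        print(f"{host}: {counts[host]} requests, {sent[host]} bytes sent")
    print("Outside agreed scope:", unexpected_hosts(CAPTURED, AGREED_HOSTS))
```

The point of keeping it this small is that the output is something a non-technical reader can act on: a list of destinations and a short list of surprises, without anyone needing to read raw traffic.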

A data privacy schema - a self-declared schema that allows businesses to quickly assess whether the changes in an update are creeping out of agreed tolerance (we're happy for more telemetry, we're happy for more telemetry for research, we're happy for more telemetry for partners to access, etc.). Things a business may care about include residency, partner access, participation in 'experimental' data collections, and so on.
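As a thought experiment, a schema like this could be as simple as a handful of declared fields that get compared against what the business has agreed to tolerate. The sketch below is only one guess at a shape: `PrivacyDeclaration`, its field names and the sample values are all hypothetical, and no vendor publishes anything in this format as far as I know.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PrivacyDeclaration:
    """A hypothetical self-declared schema; every field name is an assumption."""
    version: str
    data_categories: frozenset = field(default_factory=frozenset)
    purposes: frozenset = field(default_factory=frozenset)
    residency: frozenset = field(default_factory=frozenset)
    partner_access: bool = False
    experimental_collection: bool = False


def out_of_tolerance(tolerance, declared):
    """List the ways a vendor declaration exceeds what the business agreed to."""
    findings = []
    # Set-valued fields: anything declared but not agreed is a finding.
    for name in ("data_categories", "purposes", "residency"):
        extra = getattr(declared, name) - getattr(tolerance, name)
        if extra:
            findings.append(f"{name}: not agreed: {', '.join(sorted(extra))}")
    # Boolean fields: a finding only when the vendor turns on something not agreed.
    for name in ("partner_access", "experimental_collection"):
        if getattr(declared, name) and not getattr(tolerance, name):
            findings.append(f"{name}: declared but not agreed")
    return findings


# What the business has agreed to tolerate (made-up values).
agreed = PrivacyDeclaration(
    version="tolerance",
    data_categories=frozenset({"typed_text", "crash_reports"}),
    purposes=frozenset({"service", "research"}),
    residency=frozenset({"UK", "EU"}),
)

# What a new release declares (made-up values).
update = PrivacyDeclaration(
    version="2.0",
    data_categories=frozenset({"typed_text", "crash_reports", "running_processes"}),
    purposes=frozenset({"service", "research"}),
    residency=frozenset({"UK", "EU"}),
    partner_access=True,
)

for finding in out_of_tolerance(agreed, update):
    print(finding)
```

With these sample values the check reports the new `running_processes` category and the newly enabled partner access, which is the kind of "this update crossed a line" signal a DPO could pick up without touching a proxy. It only works if the declaration is honest, so it would sit alongside traffic checks rather than replace them.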

I don't know the answer but would love to throw the idea around all the same. It's a fun one, and I genuinely believe that data protection teams could care more if they weren't overwhelmed by the amount of work they do or the frequency of change. I do think there is space here for privacy engineering that goes beyond a development mentality/mantra - something assistive, with the means to provide rapid quantification of concern to data protection teams in businesses and maybe even at home (schema, cough).

Can you remember a time when a member of the IT team raised concern over a change log or an update to an application? It's probably not their responsibility. OK, can you remember a time when a member of the data protection team asked the IT team any questions about telemetry changes, or maybe even asked for sample traffic for analysis by security nerds?

apologies for the poor grammar, typos and formality of the post... you know why :)